As nicely pointed out in [1], responsible cybersecurity research, and more specifically vulnerability disclosure, is a two-way street. Vendors and manufacturers [2], as well as researchers, must act responsibly.

According to recent research [3] [4] [5], most vendors and manufacturers in robotics are ignoring security flaws completely. Pressuring these groups into more reasonably timed fixes will shrink the window of opportunity for cybercriminals to abuse vulnerabilities. This is especially important in robotics, given its direct physical connection with the world.

Consequently, vulnerability disclosure policies establish a two-way framework for coordination between researchers and manufacturers, resulting in greater security in robotics and improved overall safety [3:1].

In other words:

Coordinated vulnerability disclosure aims to avoid 0-days. Prompt mitigation is key.

Preliminary work on Vulnerability Disclosure Policies (VDP) in robotics included the Robot Vulnerability Database (RVD) VDP. This policy is strongly aligned with the goal of improving the robotics industry's response times to security bugs, while also allowing softer landings for bugs marginally over the deadline. More recent VDP activity within the Robot Operating System (ROS) community happened first here and here, ultimately landing in this PR.

Notice: This article touches on a sensitive topic and provides an intuition into what I believe are best practices. The content herein is for informational purposes only. The author shall therefore not be liable for any damages of any nature incurred as a result of or in connection with the use of this information.

How does the process work?

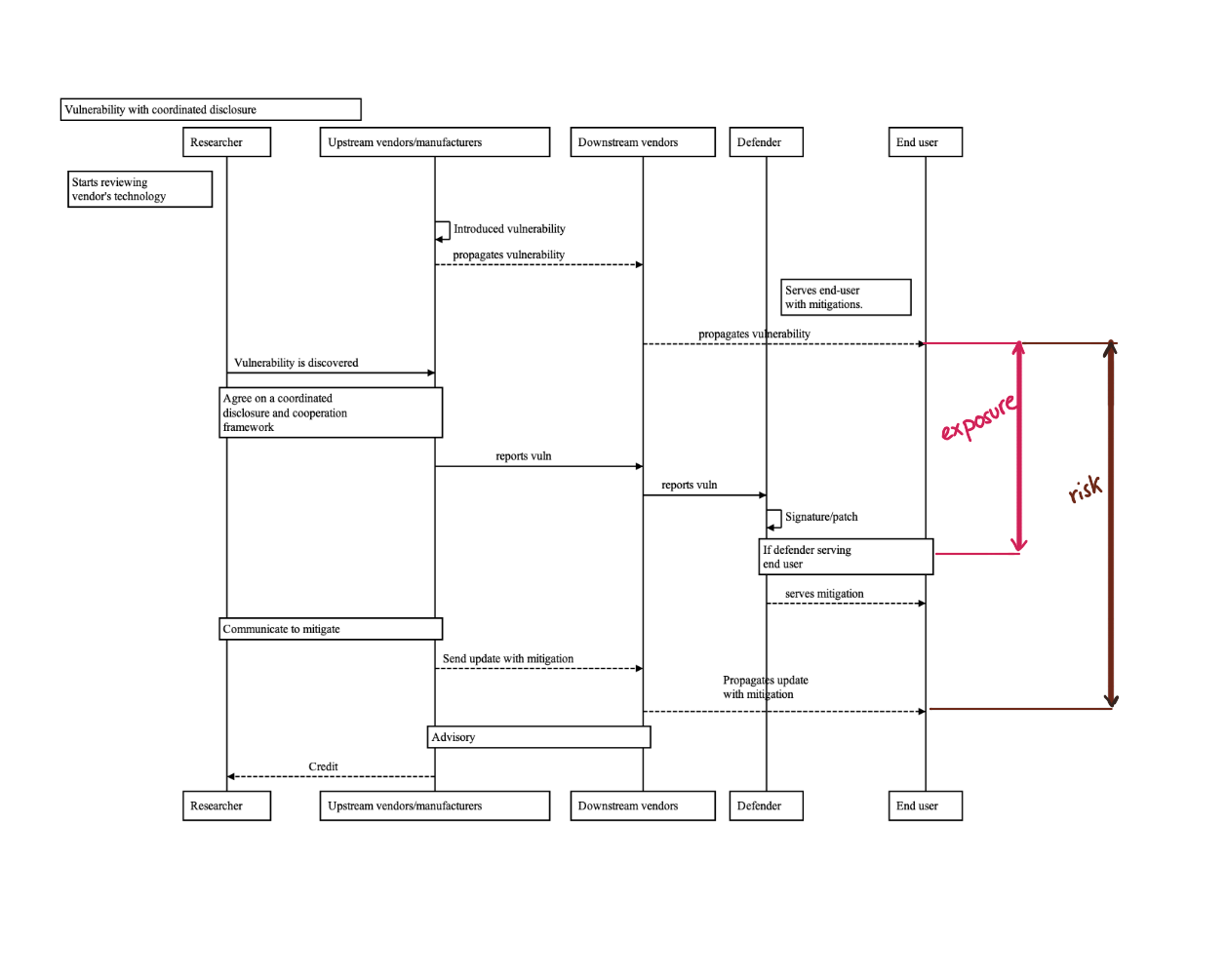

The ideal and most common case is depicted below:

This scenario is one of several case studies covered in [6]. If you wish to learn more about coordinated disclosure in robotics (which will almost always involve multiple parties), I highly encourage you to read [6:1] and [7], including their resources.

Note the following:

- Exposure time frame: managed, caused and fully controlled by the vendor or manufacturer. It's up to them to notify the corresponding entities and/or defenders so that a mitigation is provided via signatures, patches or similar.

- Risk time frame: managed by the vendor or manufacturer. It often shows how fast and security-ready a particular technology is.

Bottom line: it is the manufacturer's responsibility to try to minimize the exposure and risk time frames. Researchers and end-users can often do very little to shorten either of these periods. Vendors caring more about security will present smaller exposure and risk time frames.

A troubling "coordination"

The outlook seems rather clear: researchers should agree with vendors and manufacturers on a disclosure framework, resulting in a VDP.

Ideally, the particularities of each vulnerability should be considered individually, and the disclosure framework should be flexible enough to accommodate different severities. More severe flaws should put more pressure on the vendor or manufacturer, so that end-users are protected faster. A vulnerability in a third-party software component that shows no apparent exploitability at first glance can't be treated at the same level as a remote code execution vulnerability in a robot operating in a healthcare environment. The reality is different, though.
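
To make the severity-dependent framework described above more concrete, here is a minimal Python sketch that maps a flaw's severity to a disclosure deadline. Everything in it is a hypothetical illustration: the `Severity` levels, the day counts in `DEADLINE_DAYS` and the `safety_critical` flag (which halves the grace period for, e.g., robots operating in healthcare) are assumptions, not values taken from any existing VDP.

```python
from datetime import date, timedelta
from enum import Enum


class Severity(Enum):
    # Hypothetical severity levels, loosely modelled on common CVSS bands.
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"
    CRITICAL = "critical"


# Illustrative deadlines in days (assumptions, not taken from any real VDP):
# more severe flaws put more pressure on the vendor.
DEADLINE_DAYS = {
    Severity.LOW: 180,
    Severity.MEDIUM: 120,
    Severity.HIGH: 90,
    Severity.CRITICAL: 45,
}


def disclosure_deadline(reported: date, severity: Severity,
                        safety_critical: bool = False) -> date:
    """Return the date after which the flaw may be disclosed publicly.

    `safety_critical` is a hypothetical flag for robots deployed in
    sensitive settings (e.g. healthcare); it halves the grace period.
    """
    days = DEADLINE_DAYS[severity]
    if safety_critical:
        days //= 2
    return reported + timedelta(days=days)


if __name__ == "__main__":
    reported = date(2020, 1, 1)
    # Low-impact third-party component: prints 2020-06-29
    print(disclosure_deadline(reported, Severity.LOW))
    # Remote code execution in a healthcare robot: prints 2020-01-23
    print(disclosure_deadline(reported, Severity.CRITICAL, safety_critical=True))
```

Keeping the policy as an explicit lookup table makes it easy for researchers and vendors to negotiate and adjust the numbers case by case, which is the flexibility argued for above.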

Often, researchers are forced to accept a pre-existing VDP that does not fit their particular scenario. This has traditionally been achieved either through lawsuits (without legal basis in most cases) or by exerting peer pressure on researchers and publicly discrediting their work through communication campaigns (note that it's often the vendors and manufacturers who control communications, via advertising). This has been happening for the last 20 years, starting with big software corporations opposing cybersecurity research and demonizing it. More importantly, this is already happening in robotics. The misuse of communication to silence security researchers and exert social pressure on them is likely one of the reasons why the general public currently does not differentiate between cybercriminals and hackers.

There are hundreds of documented cases where well-known security researchers have described their experiences collaborating with big and small companies; [8] presents one of them. Google's security research group indirectly acknowledges this in the following paragraph from [1:1]:

We call on all researchers to adopt disclosure deadlines in some form, and feel free to use our policy verbatim (we've actually done so, from Google's) if you find our record and reasoning compelling.

It's ultimately the researcher's duty to decide which disclosure deadlines to accept. Of course, this doesn't mean they shouldn't listen to the needs of the vendors or manufacturers. Coordination is key. Security research is a two-way street. The final goal is to reduce the number of 0-days.

Who owns cybersecurity research results?

As a roboticist working on cybersecurity research for robots, I'm lucky to work side-by-side with people with interesting backgrounds, including some biologists. From what I've learned, if a cellular biologist finds a new species, it's commonly accepted that the discovery belongs to him or her (they may even name it after themselves!). Similar situations happen across other areas and domains, but traditionally not in security. This is nicely introduced in [8:1] by César Cerrudo. The default scenario is the following:

- Researcher discovers a security vulnerability.

- Researcher responsibly reports the vulnerability to the corresponding vendor.

- Vendor may (or may not) consider the vulnerability. In some cases, the vendor enters into a cooperation (often unpaid) with the researcher under confidentiality agreements that oblige the researcher not to disclose anything without the vendor's consent.

- Vendor mitigates (or not) the flaw and eventually, in some cases years later, decides to disclose the vulnerability and credit the researcher.

Cybersecurity research results are owned by the researcher. She or he deserves all the credit for the original work. The associated Intellectual Property (IP) for the discovery should follow similarly. It's of course the researcher's duty to act ethically and to establish the right procedures to ensure the discovery is used ethically.

A quick look at the security advisories of any vendor of robot software components gives an intuition of how long vendors take to react. Response times are typically no shorter than 6 months and, historically, in many cases disclosures have come only after many years. Is the researcher who signs a Non-Disclosure Agreement (NDA) acting ethically when allowing the vendor to expose (potentially) thousands of users over a many-year period? Is the vendor who forces the researcher to sign an NDA to "discuss collaborations" and later avoids prompt reaction and disclosure acting ethically?

The ethics behind vulnerability disclosure in robotics

As with most things in life, context matters. Arguably, the most affected group is end-users. Let's consider the previous questions as case studies and discuss them always from the end-user's viewpoint (rather than the researcher's or the vendor's):

Case study 1: Is the researcher who signs an NDA acting ethically when allowing the vendor to expose (potentially) thousands of users over a many-year period?

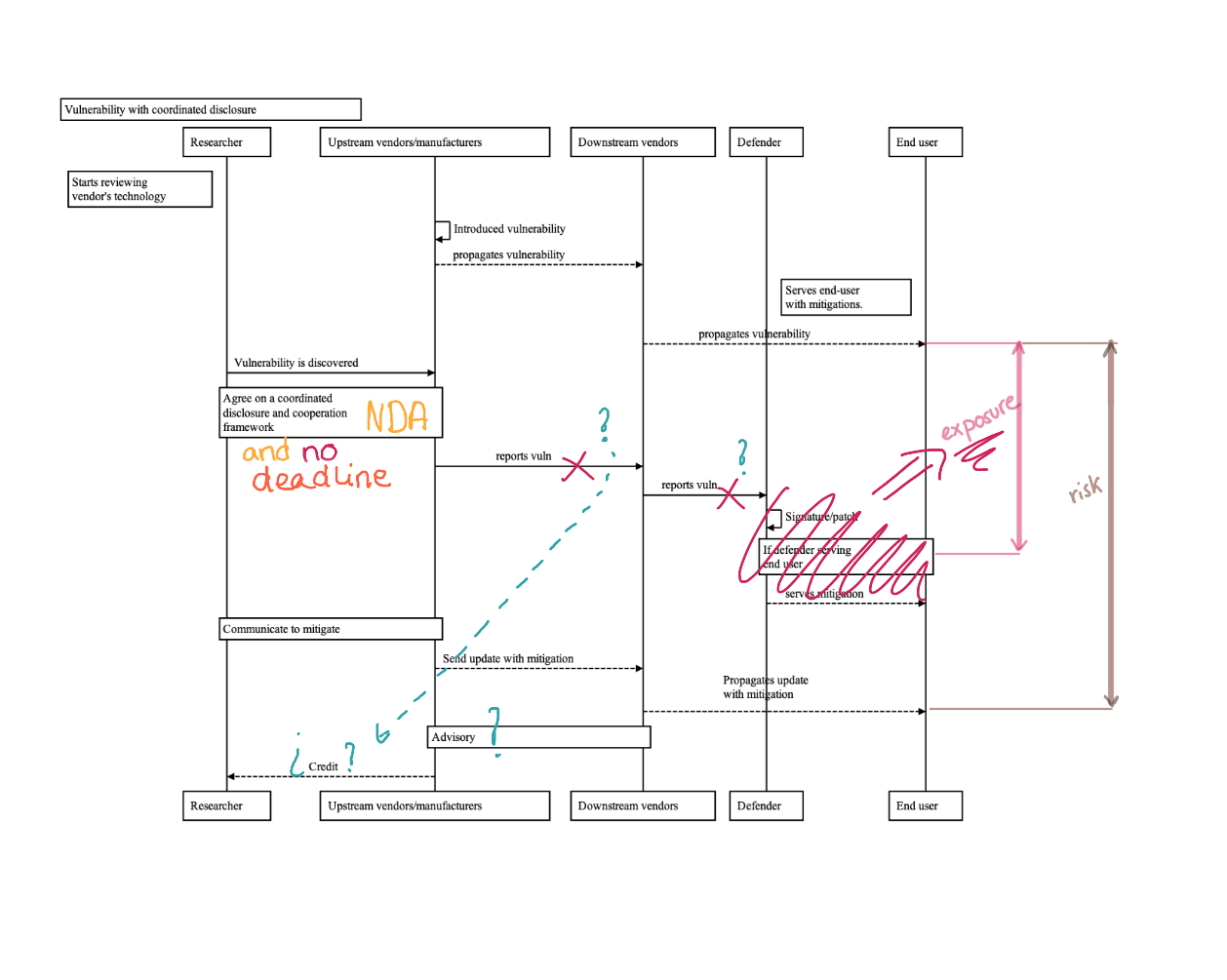

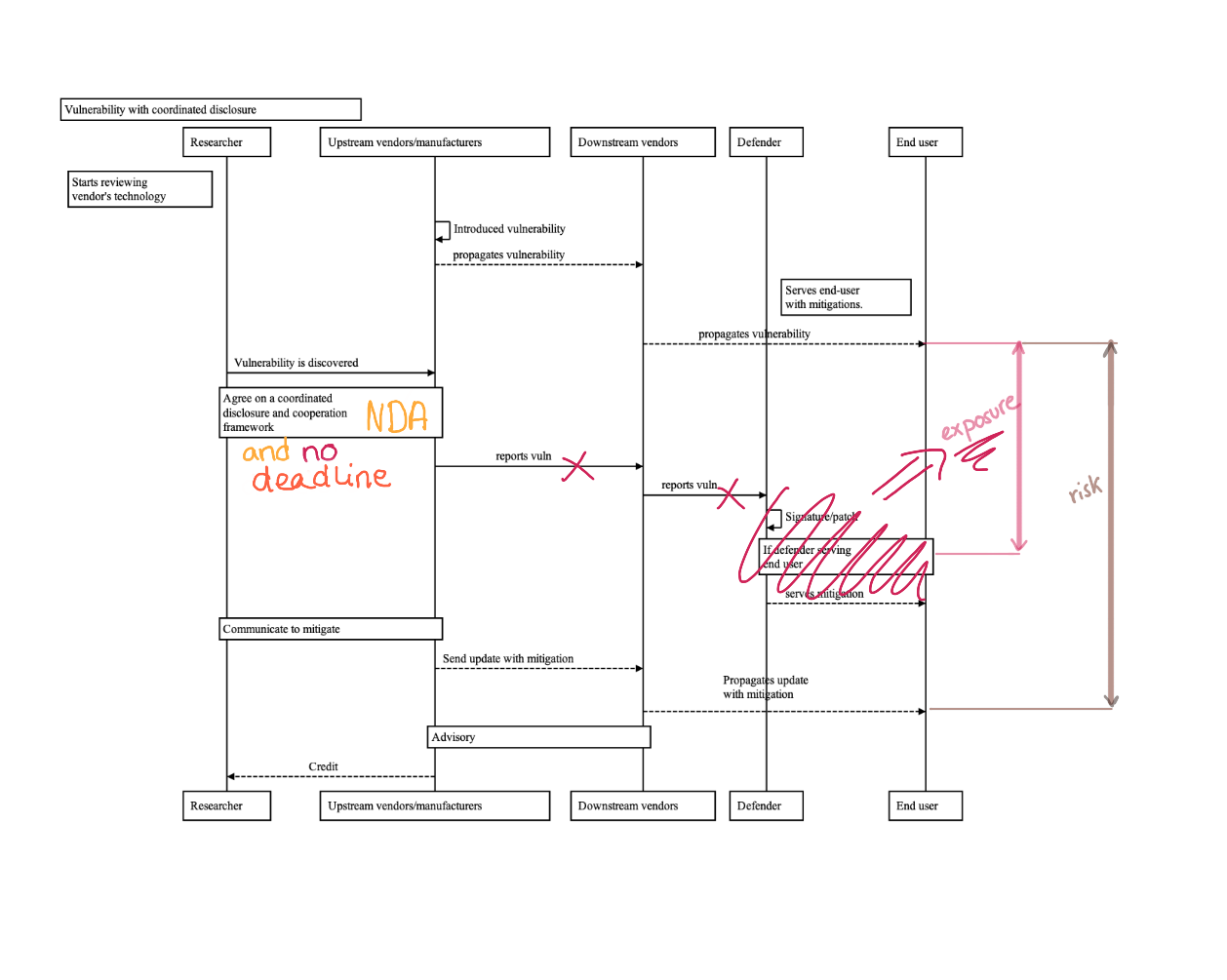

Let's analyze the following dynamics graphically:

Often, from the moment an NDA is signed, the upstream vendor or manufacturer manages the timing. From that point on, disclosures are under the vendor's control. Will the company ever disclose the flaw and credit the researcher? Will they do it responsibly and within a reasonable time frame? There are no guarantees for the security researcher. Consequently, if the researcher accepts confidentiality agreements without any deadline, they would not be acting ethically from the end-users' viewpoint, since the flaw could remain present for years.

In other words, the exposure time is no longer under the researcher's control and could be extended as much as the vendor desires. This is an extremely common case in cybersecurity, one I've already faced repeatedly in robot cybersecurity.

Case study 2: Is the vendor who forces the researcher to sign an NDA to "discuss collaborations" and later avoids prompt reaction and disclosures ethical?

This case is similar, but from the vendor's angle. Accordingly, the vendor would not be acting ethically from the end-users' perspective unless it commits to a responsible deadline that protects customers.

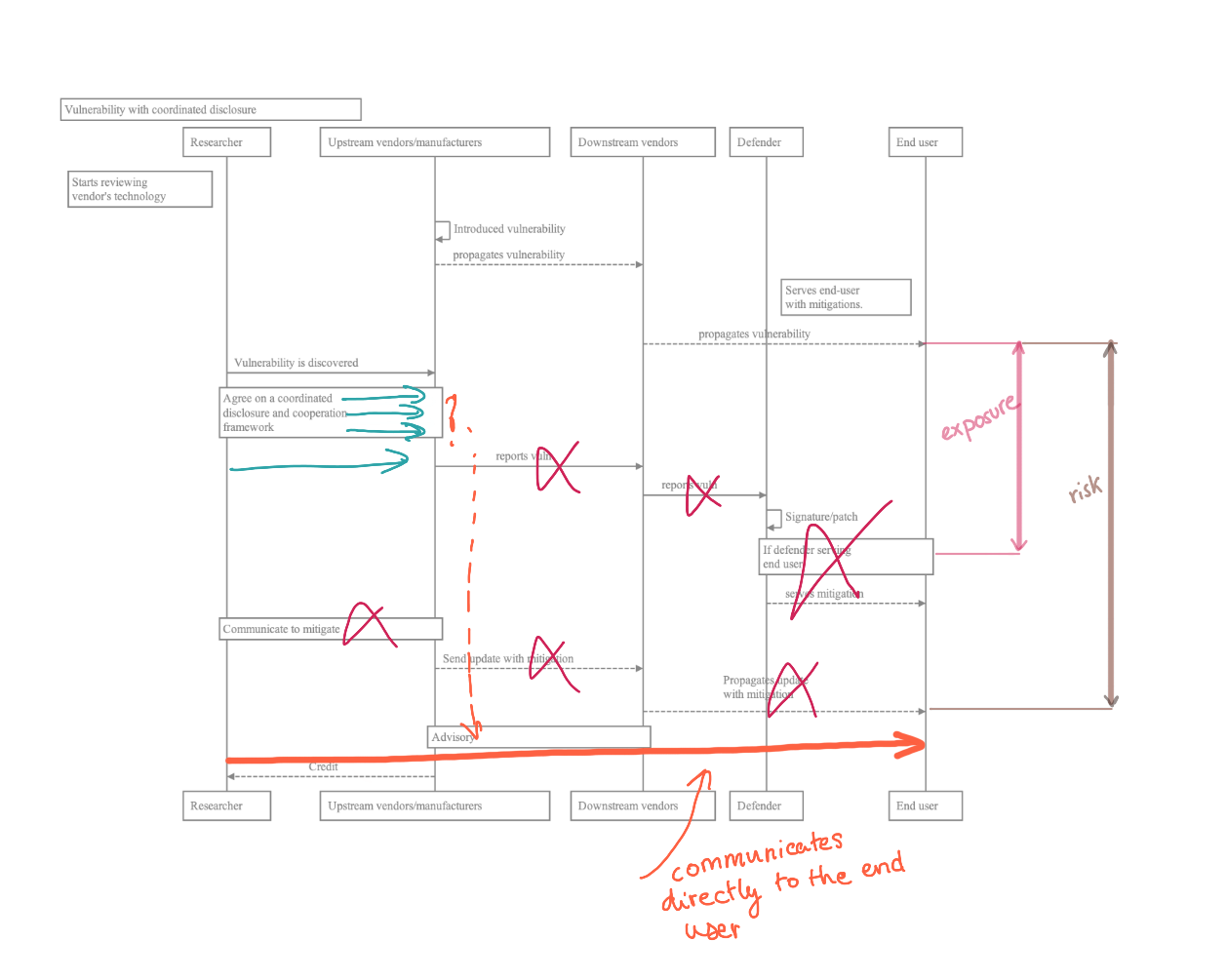

Case study 3: Is the researcher who decides to disclose their findings, after responsibly attempting (maybe repeatedly) to coordinate with the vendor, acting ethically?

Yes, it is, provided the researcher seeks the best interest of end-users.

If, after repeated attempts (maybe via different channels) and after ensuring that the vendor is aware of the issue, there is still no response, then from the end-user's ethical viewpoint it's the researcher's duty to disclose the flaw and allow end-users to demand proper service and fixes.

This is more common than it appears. For an example, see https://news.aliasrobotics.com/week-of-universal-robots-bugs-exposing-insecurity/. In this case, Alias Robotics decided to disclose dozens of vulnerabilities in Universal Robots' products after failing to coordinate mitigation with the vendor.

If you'd like to read more about the status, refer to:

What if the researcher looks after not only end-users' interests but also his or her own, e.g. monetary ones?

Case study 4: Is the researcher who publicly releases flaws to end-users acting ethically?

Depends. The right ethical approach (again, from the end-users' angle) is to attempt to reach common ground with the vendor for coordinated disclosure and assist the vendor in the process.

Sometimes, some groups don't have the time or resources (legal, person-power, etc.) to follow this procedure and opt for disclosure. In such cases, they can go directly to a CERT, which will coordinate with the vendor on their behalf. Provided the researcher hands the coordination responsibility to the CERT and abides by its deadline (e.g. 20, 45 or 90 days), the researcher will be acting ethically from the end-users' viewpoint, even if they release the flaws publicly once the deadline has passed.
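
The CERT route can be summarized as a simple date check. The following Python sketch is a hypothetical illustration: the `may_publish` helper, its parameters and the choice of counting the deadline from the day coordination is handed over to the CERT are assumptions; each CERT defines its own rules and start dates.

```python
from datetime import date, timedelta
from typing import Optional


def may_publish(handed_to_cert: Optional[date], cert_deadline_days: int,
                today: date) -> bool:
    """Hypothetical check for the CERT route described above.

    Public release is only considered acceptable if coordination was handed
    to a CERT and the CERT's deadline has elapsed since that hand-over.
    """
    if handed_to_cert is None:
        # No CERT involved: this route does not apply.
        return False
    if cert_deadline_days < 0:
        raise ValueError("deadline must be non-negative")
    return today >= handed_to_cert + timedelta(days=cert_deadline_days)


if __name__ == "__main__":
    handed = date(2020, 3, 1)
    # Compare the example deadlines mentioned above on the same day.
    for days in (20, 45, 90):
        print(days, may_publish(handed, days, today=date(2020, 4, 20)))
```

Run as-is, this prints `20 True`, `45 True` and `90 False`: on 20 April 2020 the 20- and 45-day deadlines have passed, while the 90-day one (ending 30 May 2020) has not.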

Case study 5: Is the researcher who sells their findings acting ethically?

Depends. Provided all the conditions above are met, it is. Why not? Security research needs to be funded. This is one of the core principles behind bug bounty programs: to incentivize researchers to file bug tickets rather than sell their findings to third parties who may or may not use them for ethical purposes (e.g. vulnerability databases might buy flaws, but so do criminals and organizations with malicious intentions).

Many researchers constantly find themselves at a crossroads. Many vendors and manufacturers don't agree to fund security research, but instead demand that flaws be reported to them under an NDA that commits the vendor to nothing, in order to "avoid" suing researchers.

Most security researchers have faced this. Researchers need to consider whether they "give away" their findings to the vendor or to the end-users. To me, based on the rationale above, the answer is rather straightforward: the end-user should be considered first.

[1] How Google handles security vulnerabilities. Google. Retrieved from https://www.google.com/about/appsecurity/

[2] By referring to vendors and manufacturers, I aim to cover robot software vendors and robot hardware manufacturers. The latter are often both.

[3] Kirschgens, L. A., Ugarte, I. Z., Uriarte, E. G., Rosas, A. M., & Vilches, V. M. (2018). Robot hazards: from safety to security. arXiv preprint arXiv:1806.06681.

[4] Mayoral-Vilches, V., Juan, L. U. S., Carbajo, U. A., Campo, R., de Cámara, X. S., Urzelai, O., ... & Gil-Uriarte, E. (2019). Industrial robot ransomware: Akerbeltz. arXiv preprint arXiv:1912.07714.

[5] Vilches, V. M., Juan, L. U. S., Dieber, B., Carbajo, U. A., & Gil-Uriarte, E. (2019). Introducing the robot vulnerability database (rvd). arXiv preprint arXiv:1912.11299.

[6] National Telecommunications and Information Administration (NTIA) and FIRST. Guidelines and Practices for Multi-Party Vulnerability Coordination and Disclosure. Version 1.1. Released Spring, 2020. Retrieved from https://www.first.org/global/sigs/vulnerability-coordination/multiparty/guidelines-v1.1

[7] National Telecommunications and Information Administration (NTIA) and FIRST. Guidelines and Practices for Multi-Party Vulnerability Coordination and Disclosure. Version 1.0. Released Summer, 2017. Retrieved from https://www.first.org/global/sigs/vulnerability-coordination/multiparty/guidelines-v1.0

[8] C. Cerrudo. Historias de 0days, Disclosing y otras yerbas. Ekoparty Security Conference 6th edition. Retrieved from https://vimeo.com/16504265.